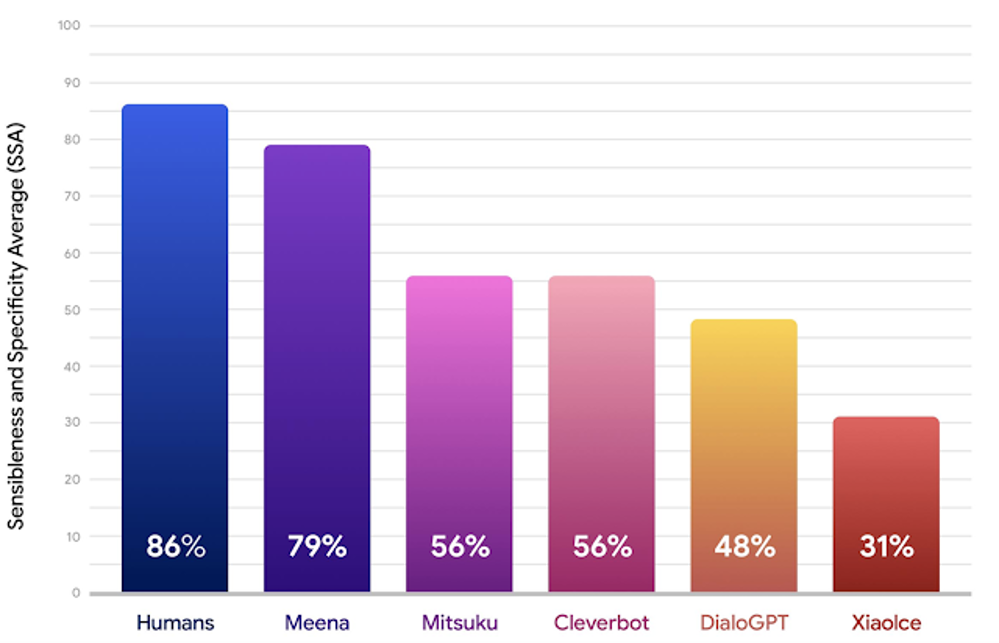

Google just presented Meena, an end-to-end neural conversational model that learns to respond sensibly to a given conversational context. According to Google’s blog post, Meena can conduct conversations that are more sensible and specific than those of existing state-of-the-art chatbots. These improvements are reflected in a new human evaluation metric that Google proposes for open-domain chatbots, called Sensibleness and Specificity Average (SSA), which captures basic but important attributes of human conversation.

Automatic Metric: Perplexity

Google demonstrates that perplexity, an automatic metric that is readily available to any neural conversational model, correlates highly with SSA. The training objective is therefore to minimize perplexity, the uncertainty of predicting the next token (in this case, the next word in a conversation). At the heart of Meena lies the Evolved Transformer seq2seq architecture, a Transformer architecture discovered by evolutionary neural architecture search to improve perplexity.

According to Google, the Meena model has 2.6 billion parameters and is trained on 341 GB of text, filtered from public domain social media conversations. Compared to an existing state-of-the-art generative model, OpenAI GPT-2, Meena has 1.7x greater model capacity and was trained on 8.5x more data.
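The two ratios can be checked with simple arithmetic. The sketch below takes Meena's figures from the paragraph above. The GPT-2 figures (1.5 billion parameters, about 40 GB of training text) are not stated in this article; they are assumptions based on OpenAI's published numbers for the largest GPT-2 model.

```python
# Meena figures as quoted in the article.
meena_params_billion = 2.6
meena_data_gb = 341

# Assumed GPT-2 figures (not from this article): largest GPT-2 model,
# trained on roughly 40 GB of text.
gpt2_params_billion = 1.5
gpt2_data_gb = 40

capacity_ratio = meena_params_billion / gpt2_params_billion
data_ratio = meena_data_gb / gpt2_data_gb

print(f"capacity ratio: {capacity_ratio:.1f}x")
print(f"data ratio: {data_ratio:.1f}x")
```

Under these assumptions, the results round to the 1.7x and 8.5x quoted by Google.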

Mastered Challenges

“Researchers have long sought for an automatic evaluation metric that correlates with more accurate, human evaluation. Doing so would enable faster development of dialogue models, but to date, finding such an automatic metric has been challenging,” Google states. The company is therefore all the more proud of its finding that perplexity, an automatic metric that is readily available to any neural seq2seq model, correlates strongly with human evaluation as measured by SSA.

Perplexity measures the uncertainty of a language model: the lower the perplexity, the more confident the model is in generating the next token (character, word, or subword). Conceptually, perplexity represents the number of choices the model is trying to choose from when producing the next token.
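To make the "number of choices" reading concrete, here is a minimal sketch of how perplexity is commonly computed: as the exponential of the average negative log-probability the model assigned to each token that actually occurred. The function name `perplexity` and the example probabilities are illustrative assumptions, not taken from Google's post or from Meena's code.

```python
import math


def perplexity(token_probs):
    """Perplexity from the probabilities a model gave to each observed token.

    `token_probs` is a hypothetical input: one probability in (0, 1] per token.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    # Average negative log-likelihood per token (cross-entropy in nats).
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    # Exponentiating turns it back into an "effective number of choices".
    return math.exp(avg_nll)


# A model that is always torn between 10 equally likely tokens.
uniform = perplexity([0.1] * 5)

# A model that is fairly sure of each next token.
confident = perplexity([0.9, 0.8, 0.95])

print(uniform, confident)
```

A model that assigns probability 0.1 to every observed token behaves as if it were choosing among ten equally likely options, so its perplexity is about 10. A more confident model scores lower.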

“During development, we benchmarked eight different model versions with varying hyperparameters and architectures, such as the number of layers, attention heads, total training steps, whether we use Evolved Transformer or regular Transformer, and whether we train with hard labels or with distillation: the lower the perplexity, the better the SSA score for the model, with a strong correlation coefficient (R² = 0.93),” Google explains, adding: “Our best end-to-end trained Meena model, referred to as Meena (base), achieves a perplexity of 10.2 (smaller is better) and that translates to an SSA score of 72%. Compared to the SSA scores achieved by other chatbots, our SSA score of 72% is not far from the 86% SSA achieved by the average person. The full version of Meena, which has a filtering mechanism and tuned decoding, further advances the SSA score to 79%.”

Future Research

Finally, Google promises to continue working on lowering the perplexity of neural conversational models through improvements in algorithms, architectures, data, and compute, stating: “While we have focused solely on sensibleness and specificity in this work, other attributes such as ‘personality’ and ‘factuality’ are also worth considering in subsequent works. Also, tackling safety and bias in the models is a key focus area for us, and given the challenges related to this, we are not currently releasing an external research demo. We are evaluating the risks and benefits associated with externalizing the model checkpoint, however, and may choose to make it available in the coming months to help advance research in this area.” (Source: Google Blog)

By Daniela La Marca